Concept

VR/AR streaming works by rendering complex, demanding scenes on powerful edge servers and streaming the resulting video over a local network or the internet to mobile devices or VR headsets. The device decodes the video stream and displays it as an immersive environment. This is an alternative to local rendering, in which the environment is rendered on the device’s built-in hardware. This form of remote rendering has both benefits and downsides:

- Pro: Uses less battery due to the simplified user client

- Pro: Allows for more compact VR/AR headsets

- Pro: Allows for much more graphically intensive scenes to be rendered

- Con: Introduces inherent latency from network transmission and video encoding/decoding, and requires high bandwidth

- Con: Video quality can suffer from compression artifacts, depending on the chosen codec

What does 5G bring to the table?

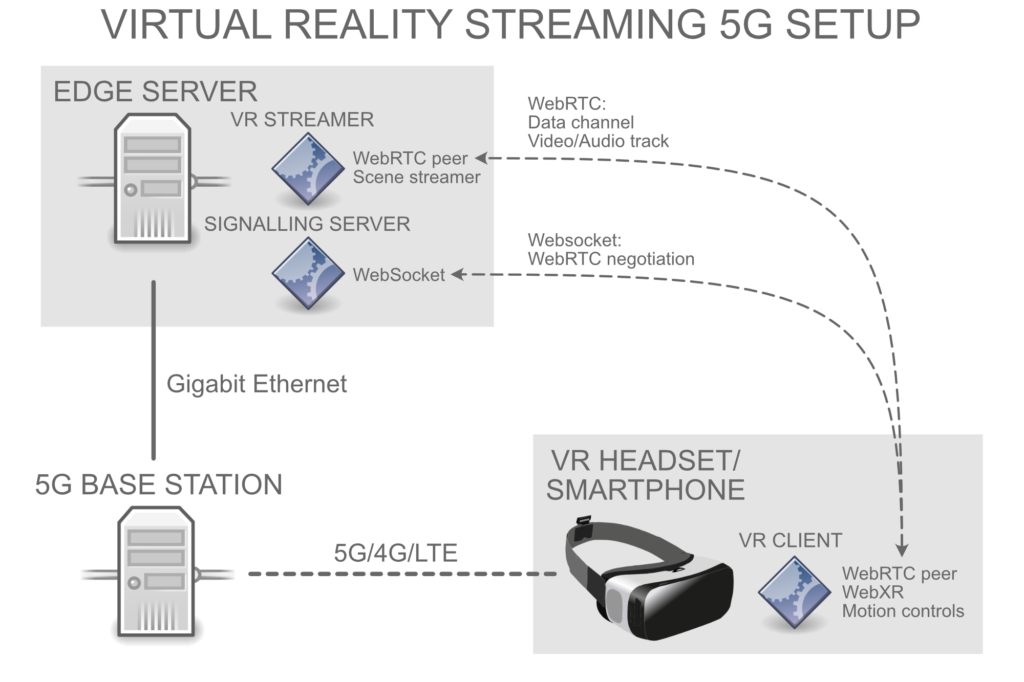

This technology may become particularly relevant in the future as VR/AR headsets with built-in 5G modems become more commonplace. 5G technology brings numerous advantages to VR/XR streaming, mainly stemming from its higher data transmission rates, lower latency, and massive machine-type communication (mMTC) capabilities.

One of the primary challenges in VR/XR streaming is the demand for high bandwidth to handle the extensive data associated with rich, detailed, and interactive virtual environments. 5G, with its impressive data transmission capabilities, is particularly well-suited to handle this challenge.
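To give a sense of scale, the short calculation below estimates the uncompressed bitrate of a video stream. The resolution, frame rate, colour depth and encoder target are assumed example values and were not measured in this project.

```javascript
// Uncompressed bitrate in bits per second for a given video format.
function rawBitrate(width, height, fps, bitsPerPixel) {
  return width * height * bitsPerPixel * fps;
}

// Assumed example format: 1920x1080 at 60 fps with 24 bits per pixel.
const raw = rawBitrate(1920, 1080, 60, 24);
console.log((raw / 1e9).toFixed(2) + " Gbit/s uncompressed");

// Assumed encoder target of 20 Mbit/s, chosen only for illustration.
const targetBitrate = 20e6;
console.log("compression ratio of roughly " + Math.round(raw / targetBitrate) + ":1");
```

Under these assumptions the raw stream is close to 3 Gbit/s, which is why the video has to be compressed by a codec before it is streamed.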

The low latency of 5G networks is another significant advantage. In VR/XR experiences, even minor delays between a user’s actions and the system’s response can disrupt the sense of immersion and sometimes lead to discomfort or nausea, a phenomenon known as motion sickness.

Finally, 5G’s mMTC capabilities allow for high-density connections, supporting up to a million devices per square kilometer. This is particularly beneficial for large-scale or public VR/XR experiences, where potentially thousands of users might be interacting with the system simultaneously.

Use case

As part of the 5G Hub Vaasa project, we created a use case to exemplify the potential of XR streaming over a 5G connection. This demonstration required the creation of several interconnected components.

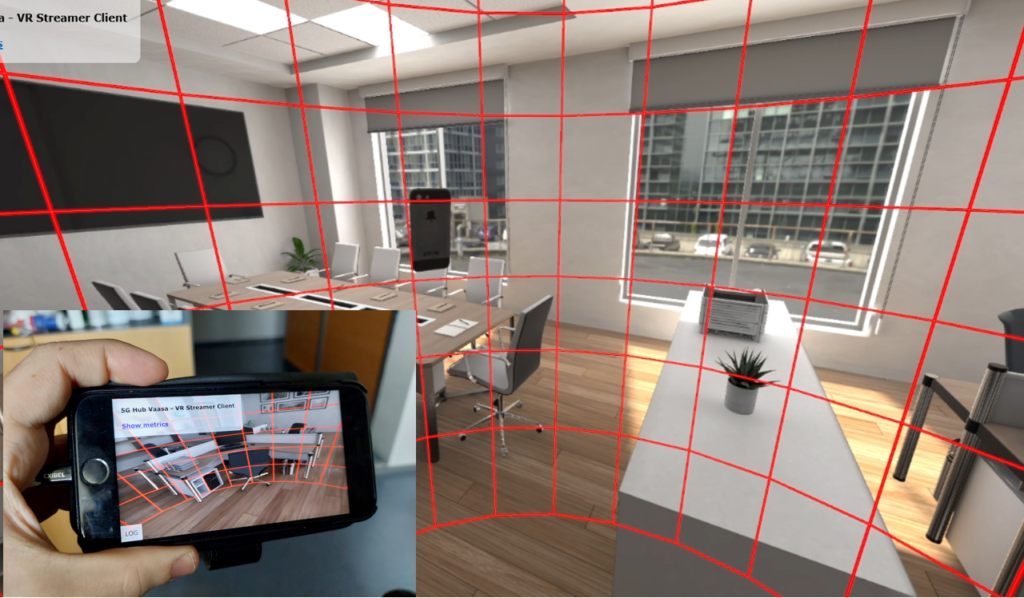

The first component is a dedicated server application built with Unity, a popular platform for 3D content creation. The server is tasked with rendering a virtual environment in real time. By leveraging Unity’s powerful 3D capabilities, we render a visually impressive virtual office in which the user can freely look around. We intentionally designed a challenging and demanding scenario to showcase the capabilities of XR streaming as an alternative to local rendering.

The video is delivered over WebRTC, and the codec selected for our setup is the efficient H.265 (HEVC). The codec comes with built-in hardware encoding and decoding support on most devices, allowing for a faster and less demanding experience on low-end hardware.

On the client side we built a web application using JavaScript. This app relies on a suite of widely used web APIs to display the server-rendered XR environment on the user’s device. The Gyroscope or WebXR API is used to relay the mobile device’s sensor data to the Unity server. This back-and-forth of positional data and video enables real-time delivery and display of XR content, streamed over a 5G or internet connection.

The final component is a JavaScript-based dashboard created to plot and log WebRTC streaming metrics. The dashboard uses the Chart.js library for graph rendering.

Network setup

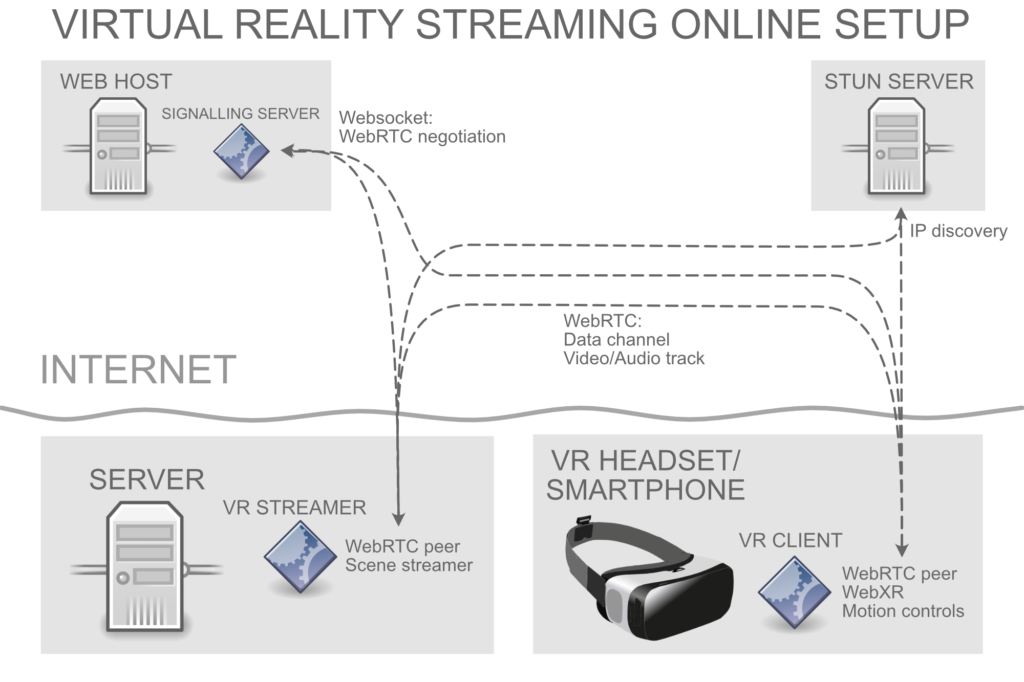

Two distinct configurations were established in our setup, depending on whether the stream operates over the 5G network or the broader internet.

For an online setup that streams over the internet, a STUN/TURN server is required. It enables two WebRTC clients positioned behind NAT or firewalls to connect: STUN lets each client discover its public address, and TURN relays the traffic when no direct path can be established. In contrast, a STUN/TURN server might not be necessary when operating on a local network, where these networking complexities are absent.
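The difference between the two configurations can be sketched as a small helper that builds the configuration object a browser passes to `RTCPeerConnection`. The function name `buildRtcConfig`, the server URLs and the credentials are hypothetical placeholders and not the servers used in the project.

```javascript
// Build an RTCConfiguration-shaped object for RTCPeerConnection.
// All URLs and credentials below are placeholders, not the project's servers.
function buildRtcConfig(overInternet) {
  if (!overInternet) {
    // On a local network the peers can usually reach each other directly,
    // so no ICE servers are listed.
    return { iceServers: [] };
  }
  return {
    iceServers: [
      // STUN: lets a client discover its public address behind NAT.
      { urls: "stun:stun.example.org:3478" },
      // TURN: relays media when no direct path can be established.
      {
        urls: "turn:turn.example.org:3478",
        username: "demo-user",
        credential: "demo-password",
      },
    ],
  };
}

// In a browser this object would be used as: new RTCPeerConnection(internetConfig)
const internetConfig = buildRtcConfig(true);
const localConfig = buildRtcConfig(false);
console.log(JSON.stringify(internetConfig, null, 2));
console.log(JSON.stringify(localConfig));
```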

Furthermore, initiating a connection between two WebRTC clients requires a signaling server. This server facilitates the initial handshake, an exchange of information that sets up the WebRTC connection on both sides. The signaling server is free-form: WebRTC does not prescribe a signaling protocol, so any communication method that allows this initial data exchange can be used.
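As a rough illustration of that handshake, the sketch below relays messages between two peers in memory. The `SignalingRelay` class, the peer names and the message shapes are assumptions made for this example. The SDP and ICE strings are placeholders, and a real setup would use a network transport such as WebSocket instead of direct function calls. Which side sends the offer is also an assumption here.

```javascript
// Minimal in-memory signaling relay: forwards each message to the other peer.
class SignalingRelay {
  constructor() {
    this.handlers = new Map(); // peerId -> message callback
  }

  join(peerId, onMessage) {
    this.handlers.set(peerId, onMessage);
  }

  send(fromId, message) {
    for (const [peerId, onMessage] of this.handlers) {
      if (peerId !== fromId) onMessage({ from: fromId, ...message });
    }
  }
}

const relay = new SignalingRelay();
const received = [];

// The rendering server answers any offer it receives.
relay.join("server", (msg) => {
  received.push(`server got ${msg.type}`);
  if (msg.type === "offer") {
    relay.send("server", { type: "answer", sdp: "<answer SDP placeholder>" });
  }
});
relay.join("client", (msg) => received.push(`client got ${msg.type}`));

// The client starts the handshake and then shares a network candidate.
relay.send("client", { type: "offer", sdp: "<offer SDP placeholder>" });
relay.send("client", { type: "candidate", candidate: "<ICE candidate placeholder>" });
console.log(received);
```

Once the offer, answer and candidates have been exchanged, the signaling path is no longer needed for the media itself, which flows over the WebRTC connection.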

Our WebRTC connection employs multiple channels. A video channel streams low-latency video. A WebRTC data channel is used to exchange live positional data and screen specifications. A data channel can transmit arbitrary data, either as text or in binary form. Although WebRTC supports audio channels, our current setup does not require one.
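The two kinds of data channel payload could look like the sketch below. The message layout is an assumption for illustration (JSON text for screen specifications, three little-endian 32-bit floats for orientation angles) and is not the wire format used in the project. The function names are hypothetical.

```javascript
// Screen specifications change rarely, so a JSON string is convenient.
function encodeScreenSpec(width, height, pixelRatio) {
  return JSON.stringify({ type: "screen", width, height, pixelRatio });
}

// Orientation is sent many times per second, so a compact binary layout
// is used here: three little-endian 32-bit floats (12 bytes in total).
function encodeOrientation(alpha, beta, gamma) {
  const buffer = new ArrayBuffer(12);
  const view = new DataView(buffer);
  view.setFloat32(0, alpha, true);
  view.setFloat32(4, beta, true);
  view.setFloat32(8, gamma, true);
  return buffer;
}

// Inverse of encodeOrientation, as the receiving side would apply it.
function decodeOrientation(buffer) {
  const view = new DataView(buffer);
  return {
    alpha: view.getFloat32(0, true),
    beta: view.getFloat32(4, true),
    gamma: view.getFloat32(8, true),
  };
}

// In a browser, both values could be passed to RTCDataChannel.send().
const packet = encodeOrientation(90, 45.5, -10.25);
const decoded = decodeOrientation(packet);
console.log(packet.byteLength, decoded);
console.log(encodeScreenSpec(1920, 1080, 2));
```

A fixed binary layout keeps each orientation update small, while JSON keeps the infrequent screen message easy to read and extend.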

Dashboard

A dashboard was created for displaying WebRTC metrics and logging statistics related to packet loss, latency and jitter buffer delay. These are standard metrics provided by the WebRTC statistics API. Low-level network traffic can be logged using the 5G dashboard or Wireshark.
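A minimal sketch of how these three metrics might be derived from a stats report is shown below. The helper `summarizeStats` is hypothetical, and using the round-trip time of the nominated candidate pair as the latency figure is an assumption, since the exact fields plotted by the dashboard are not stated here. The sample values are hand-made and not measured data.

```javascript
// Summarise packet loss, round-trip time and jitter buffer delay.
// `report` is anything with forEach(), such as the RTCStatsReport returned
// by RTCPeerConnection.getStats() in a browser.
function summarizeStats(report) {
  const summary = {
    packetLossPercent: null,
    roundTripTimeMs: null,
    jitterBufferDelayMs: null,
  };
  report.forEach((stat) => {
    if (stat.type === "inbound-rtp" && stat.kind === "video") {
      const total = stat.packetsReceived + stat.packetsLost;
      if (total > 0) {
        summary.packetLossPercent = (100 * stat.packetsLost) / total;
      }
      if (stat.jitterBufferEmittedCount > 0) {
        // jitterBufferDelay is a running total in seconds, so divide by the
        // emitted count to get an average, then convert to milliseconds.
        summary.jitterBufferDelayMs =
          (1000 * stat.jitterBufferDelay) / stat.jitterBufferEmittedCount;
      }
    }
    if (
      stat.type === "candidate-pair" &&
      stat.nominated &&
      stat.currentRoundTripTime !== undefined
    ) {
      summary.roundTripTimeMs = 1000 * stat.currentRoundTripTime;
    }
  });
  return summary;
}

// Hand-made sample values for illustration only, not measured data.
const sampleReport = new Map([
  ["in1", {
    type: "inbound-rtp", kind: "video",
    packetsReceived: 990, packetsLost: 10,
    jitterBufferDelay: 6, jitterBufferEmittedCount: 300,
  }],
  ["cp1", { type: "candidate-pair", nominated: true, currentRoundTripTime: 0.025 }],
]);
const statsSummary = summarizeStats(sampleReport);
console.log(statsSummary);
```

In a browser, the function could be called on a timer with the result of `getStats()`, and each summary appended to the chart and the log.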